This post was contributed by Provar’s Chief Strategy Officer, Richard Clark.

In 2019, I wrote an article about why test automation didn’t need AI. My original article was even renamed Demystifying AI: What Does Artificial Intelligence Mean for Test Automation? because the original title was considered too controversial and likely to alienate people.

At that time, my basis was the limited and misleading claims in the software industry about artificial intelligence. Companies were either inflating their valuation for investors or misleading customers about AI’s value in their products. Four years later, I no longer stand by that article.

Since I wrote that article, three major things have changed:

- The market and audience have generally learned the lesson of false idols when it comes to AI in testing and have become more educated about the actual value of AI. We now better understand Narrow/Weak AI and General/Strong AI.

- New tools with commercial licenses have come to market, especially in Conversational and Generative AI solutions. These tools offer practical uses of AI beyond fixing brittle element locators or improving user experiences. AI as a service now has real potential to build upon. Software companies no longer need to develop their machine-learning systems from scratch.

- Here at Provar, we moved our product suite beyond Salesforce test automation. We became a software quality vendor with integrated solutions, each with valuable benefits to our rapidly growing customer base. This includes using a new algorithm (via application intelligence using Salesforce metadata) for test case generation and leveraging the OpenAI ChatGPT API to suggest potential test scenarios from a user story. We also integrated, rather than rebuilt, existing test optimization machine learning and static code analysis tools.
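To make the last point concrete, here is a minimal sketch of how a user story could be turned into a chat-style request for test scenario suggestions. It is not Provar’s implementation: the function names, the prompt wording, and the default model name are assumptions for illustration, and the request body is only built, not sent, so it can be handed to whichever client library you use.

```python
import re
from typing import Dict, List


def build_scenario_request(user_story: str, max_scenarios: int = 5,
                           model: str = "gpt-3.5-turbo") -> Dict:
    """Build a chat-style request body asking for test scenarios.

    The model name and prompt text are illustrative assumptions.
    """
    story = user_story.strip()
    if not story:
        raise ValueError("user_story must not be empty")
    system = ("You are a QA analyst. Suggest concise test scenarios, one per "
              "line, covering positive, negative, and edge cases.")
    user = (f"User story:\n{story}\n\n"
            f"Suggest up to {max_scenarios} test scenarios.")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }


def parse_scenarios(reply_text: str) -> List[str]:
    """Split a model reply into scenario lines, dropping bullets and numbering."""
    scenarios = []
    for line in reply_text.splitlines():
        # Remove a leading "-", "*", "1." or "1)" marker if present.
        cleaned = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            scenarios.append(cleaned)
    return scenarios
```

The suggestions that come back are only a starting point; a tester still decides which scenarios are worth automating.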

At the same time, the hype around AI has never been more in the public domain. The consumer AI appetite was whetted first by voice assistants like Amazon’s Alexa, and most of us have one or more of these in our homes. Did they transform our lives? What have they replaced? And how has this heightened the demand for AI and intelligent capabilities across the board?

Intelligence on Artificial Intelligence

Is Alexa intelligent? No. Does it use AI? Absolutely. We’re seeing machine learning from voice recognition (natural language processing), personalization, and “remembering” my favorite things.

The reality is that most voice assistants do use some AI. Still, they essentially automate searching the internet, filtering harmful material (most of the time). If you want a conversational dialog, tell it which skill you want it to apply. Once you exit that skill (like an AWS Lambda function used in a chatbot), you’ll be back at square one. The skill won’t remember the conversation unless programmed to do so. However, it does retain a record of it in the vendor’s database, likely collating and categorizing some data about you.

At the same time, we’ve seen a rise in driverless car technology, which many people see on the road daily. We’re yet to trust this technology enough to make it mainstream. Still, it’s only a matter of time, in my opinion, once we overcome the moral dilemmas (save the child in front or risk killing the driver). One of the other challenges to driverless cars is learning to deal with human drivers who have learned to bully them! Guess what – people are smart and have learning models, too.

We should also remember that all these machine learning systems are trained on real-world data, which can teach them that rules aren’t always followed and that they can’t rely on algorithms alone. Humans are notoriously bad decision-makers, and data scientists help us find the line between good and bad training data.

Rise of the Co-Pilot Integration

The benefit of these integrations isn’t that we need fewer people to do the work. Instead, they’re simply co-pilots or assistants who can help us do more in less time. For example, an airline pilot in 2023 has a much easier time flying than one in 1923 or even 1993, as the plane does a lot of work for them.

It does this through a combination of computer automation (flight control), artificial intelligence (route planning, fuel saving), and engineering (hydraulics). When the plane’s computers get it wrong, it can spell disaster, so a human must be able to override them, and humans generally still take off and land the plane.

Tragically, the Boeing 737 MAX crashes were reportedly caused by a combination of factors. These included the pilot’s training conflicting with the flight software’s interpretation of faulty data. The computer pushed the nose of the aircraft down based on an incorrect stall warning. Additionally, the actions required by the pilot to override had changed from the previous aircraft model.

We also need people on the plane who will reassure us when things go wrong, take necessary safety actions, and deal with the chaotic behavior of other humans. Few of us are ready for computers to talk to computers about aircraft maintenance, refueling, or passenger safety. Pilots remain essential, not least because knowing how to land a plane under challenging conditions is much more complicated than flying straight and level for 9 hours.

Let me get back to the point. Voice assistants and driverless automobiles are transforming how people view AI from a death-wielding robot that wants to kill them to a convenience. The sudden public rise of Generative and Conversational AI tools is similar to the rise of voice assistants. One vendor caught the media’s attention first, and everyone else working hard on their AIs rushed to get attention. I’m in no position to judge which Generative AI is better, but we all know who was first to steal the headlines.

The value of the new generation of AIs, not just ChatGPT, is impressive. For example, Microsoft swiftly deepened its investment in OpenAI and built GPT into its products in weeks, not months. Likewise, companies like Salesforce have announced their own integrations. They did this because it’s much easier than constructing a machine learning system and hiring data scientists to train it on the right data.

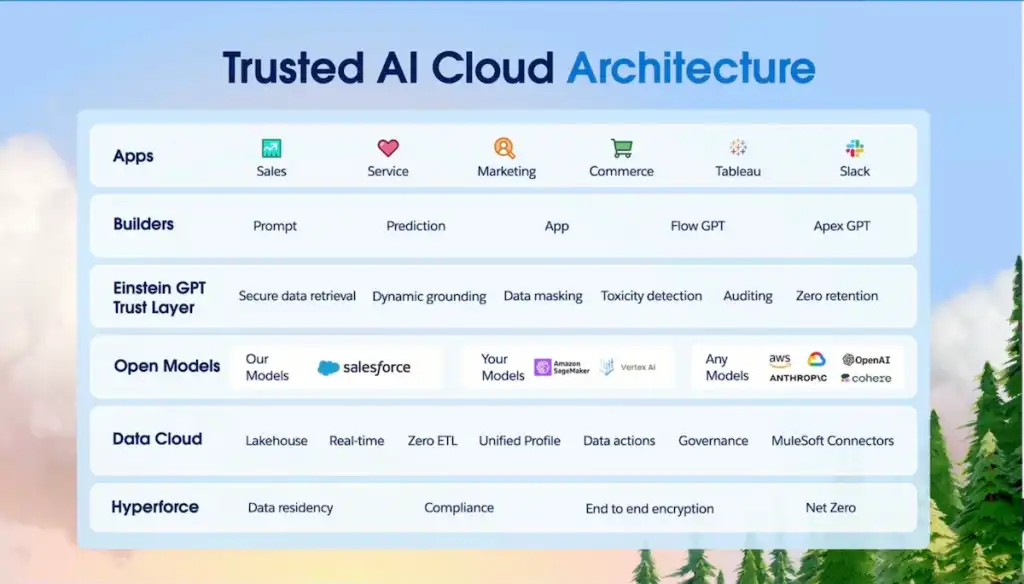

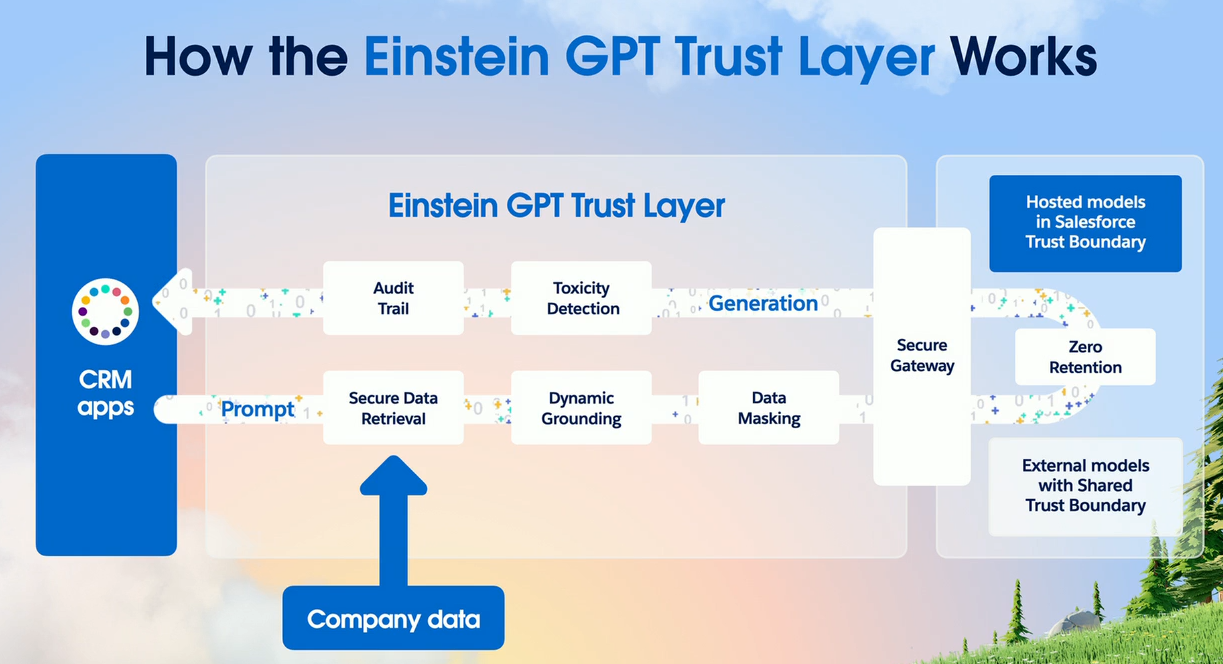

Salesforce appears to be getting it right with its recent AI Cloud announcement by adding additional value. Their existing secure and trusted architecture enables the implementation of appropriate ethics and security. They achieve this by tokenizing conversations through data masking and checking for toxicity. Additionally, they maintain audit logs and utilize different AI models for various types of requests. The system can automatically ground the model for each customer using their application data or an externally hosted language model.
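To illustrate the general idea of masking data before it reaches a language model, here is a minimal sketch. It is not how Salesforce implements it: the regular expressions, the token format, and the `mask` and `unmask` helpers are assumptions, and real masking would need to cover far more than email addresses and phone numbers.

```python
import re

# Deliberately simple patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask(text):
    """Replace emails and phone numbers with placeholder tokens.

    Returns the masked text and a token-to-original mapping that stays
    on our side and is never sent to the model.
    """
    mapping = {}

    def substitute(kind):
        def _sub(match):
            value = match.group(0)
            # Reuse the same token when a value appears more than once.
            for token, original in mapping.items():
                if original == value:
                    return token
            token = f"<{kind}_{len(mapping) + 1}>"
            mapping[token] = value
            return token
        return _sub

    masked = EMAIL.sub(substitute("EMAIL"), text)
    masked = PHONE.sub(substitute("PHONE"), masked)
    return masked, mapping


def unmask(text, mapping):
    """Restore the original values in a reply that contains tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text
```

Only the masked text would go to the model; the mapping is used afterwards to restore the real values in the response.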

The Application of AI in Testing

Image-Based Testing

Allowing rapid verification of textual and visible information within graphics, whether for user experience and accessibility analysis or for solutions that are hard to test with traditional tools.

Scenario Generation

Verifying that sufficient test coverage has been achieved for the functionality based on your unique criteria, goals, and risks. We’ve started this with Provar Manager, and more will come soon.

Result Analysis

Summarizing the trends and changes in test performance and results quickly and concisely so they can be communicated to stakeholders. Collecting test results in Provar Manager will unlock the future opportunity to utilize Salesforce AI Cloud effectively.

Performance Optimization

This applies both to orchestrating test execution, so that defects are found as early as possible and can be reworked, and to rewriting tests to improve their performance. Our near-future microservices will unlock background optimization and recommendations.
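As a rough illustration of ordering tests so that defects surface early, the sketch below ranks tests by how often they have failed relative to how long they take to run. This is a simple heuristic, not Provar’s algorithm or a machine learning model; the `CaseHistory` type, the smoothing, and the scoring formula are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CaseHistory:
    """Hypothetical summary of a test's recent execution history."""
    name: str
    runs: int
    failures: int
    avg_seconds: float


def failure_rate(history: CaseHistory) -> float:
    # Smoothed estimate so a test with no history is not ranked last.
    return (history.failures + 1) / (history.runs + 2)


def prioritize(tests: List[CaseHistory]) -> List[CaseHistory]:
    """Order tests by expected failures found per second of execution."""
    return sorted(
        tests,
        key=lambda t: failure_rate(t) / max(t.avg_seconds, 0.001),
        reverse=True,
    )


suite = [
    CaseHistory("checkout_flow", runs=50, failures=10, avg_seconds=120.0),
    CaseHistory("login_smoke", runs=50, failures=5, avg_seconds=5.0),
    CaseHistory("report_export", runs=50, failures=0, avg_seconds=60.0),
]
ordered_names = [t.name for t in prioritize(suite)]
```

Here the quick `login_smoke` test runs first even though `checkout_flow` fails more often, because it is far cheaper to run; a different weighting may suit suites where risk matters more than speed.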

Intelligent Test Generation

Provar Automation already provides metadata-based test generation, which delivers an initial level of coverage and a rapid return on investment. We want to extend this through AI to cover non-Salesforce applications and align with user journeys and business processes.

At Provar, we’ve already delivered some of these initiatives through product development and partnerships and have even more on the horizon. Adopting AI tools within our business also leads to rapid productivity improvements. The title of this article came from ChatGPT, for example!

Beware of False Idols

Some AI-based test automation solutions can take months to train on a customer’s application by monitoring user actions, or they can gather data from numerous clients. Either way, reacting immediately to changes without waiting for the model to re-train is beyond both approaches.

In addition, I’ve seen a lot of “AI Washing” by vendors desperate to showcase “AI” that doesn’t address ethics, doesn’t actually employ AI, or doesn’t offer user value.

I recently read a book by UCL Mathematics Professor Hannah Fry called Hello World. She discusses the ethical challenges of AIs and the differences between an algorithm and an artificial intelligence, specifically a machine learning solution. Two quotes from her, in particular, stand out for me:

“People sometimes just see the word ‘AI’ and it’s all sparkly and magical. It can make them forget about all of the other important things that have to go alongside it.”

– Simon Brook interviewing Hannah Fry

“Whenever we use an algorithm – especially a free one – we need to ask ourselves about the hidden incentives. Why is this app giving me all this stuff for free? What is this algorithm really doing? Is this a trade I’m comfortable with? Would I be better off without it?”

– Hannah Fry, Hello World: Being Human in the Age of Algorithms

My favorite quote, however, is this one she uses to assess whether claims about AI are bogus, her so-called “magic” test. Apologies for the language, but I feel it’s more impactful to leave it uncensored:

“If you take out all the technical words and replace them with the word ‘magic’ and the sentence still makes grammatical sense, then you know that it’s going to be bollocks.”

– Hannah Fry, Hello World: Being Human in the Age of Algorithms

Conclusion

Future blog posts will discuss this topic further and explore how certain test automation systems present themselves as AI-driven when they only leverage intelligent capabilities. This distinction is crucial. For now, though, I’ll close with this.

AI has unmistakable value, but the people element will remain, even when it comes to AI for software quality. AI isn’t a “magic” solve-all, so beware of solutions framing themselves as such. Embrace AI tools, use them securely and ethically, ensure they benefit your work practices, and measure their impact on productivity or quality.

Interested in learning more about how Provar uses intelligent capabilities in its solutions? Connect with us today!